It took another round of “longer than expected” because, although I passed my defense at the end of July, I got some requests to make significant changes to the dissertation before submitting my final copy, and that didn’t get wrapped up until early September, with my advisor paying meaningful attention to what I was doing for the first time in years.

I ended up having to include a lot of the modern academic hype-spewing, stake-claiming bullshit I’ve developed a deep distaste for, but even with that I’ll admit it’s a much better document overall after the rewrite. It looks less negative than the earlier drafts, because they were largely written before my last “I give up, I’ll try one more stupid thing” experiment finally turned up something reasonably compelling.

The copy of record of the dissertation is published; here are local PDF copies of my defense deck and of my dissertation. More information below.

Then I spent several weeks catching up on all the many things in my life I’d been shortchanging to get this done, while also having the apparently requisite back-and-forth with the graduate school about petty formatting matters. But it is done.

The Nice Pop-Academic Pitch

The basic gist of the work is that we typically operate digital cameras in a way that was derived from the behavior of film, forcing decisions on exposure parameters to be made uniformly for the whole scene and no later than the time of capture, but if we design native digital cameras that aren’t just film camera simulacra, we can do better.

Which would be absolutely impossible to create by any conventional means.

Some TDCI work I got involved in about a decade ago suggests that it’s possible to build cameras that sample the scene in a different way that builds up a model of the incident light at each sensel over time – late in the process I started generically calling those an “Image Evolution Model” (IMEV) – then computationally integrate that light later.

Starting from there, the headline artifact a ridiculously powerful exposure tool – called NUTIK – I built that let you make arbitrary choices to not just the interval(s) of integration but the gain during that time, and make those choices differently for different parts of a scene, and do it all after-capture, as many times as you want.

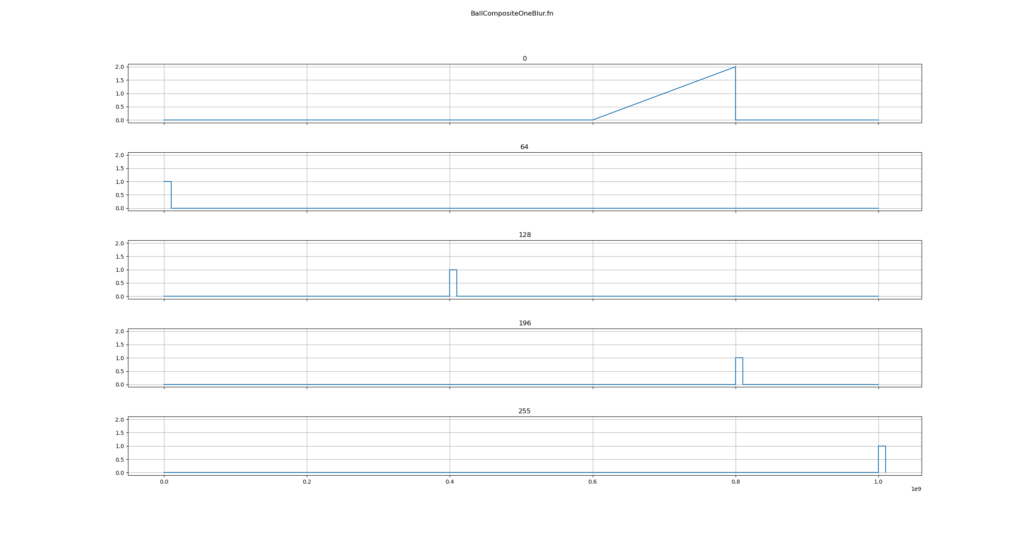

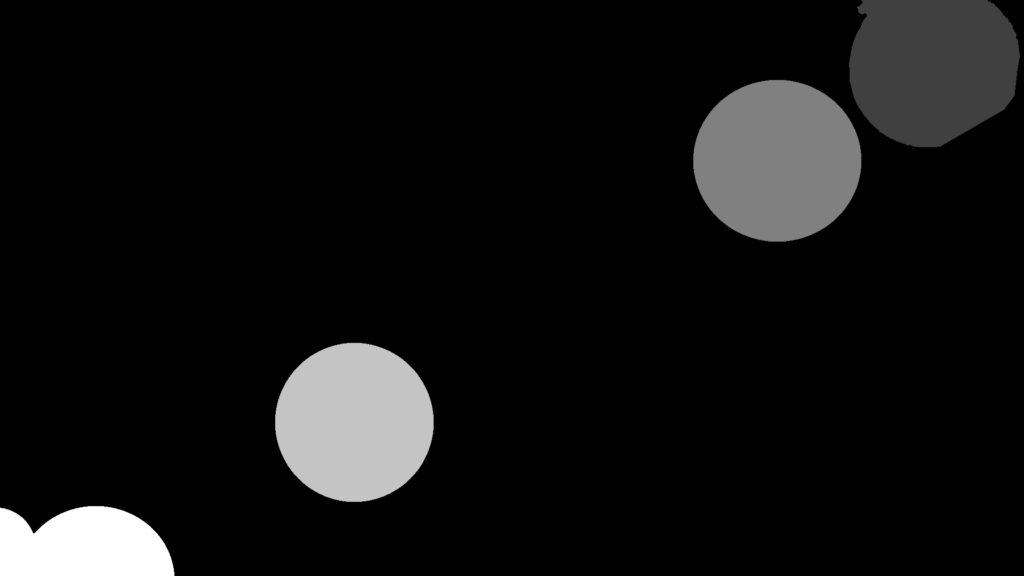

Under this model, you have complete post-capture functional control over how the record of the scene content gets integrated into an output image. It supports absurdities that no one else had noticed the model enables, like negative gain (“Remove the contribution of light during this interval”) and time-varying gain (“Ramp the ISO during the exposure”).

Each function was adjusted repeatedly in software until the desired effect was achieved.
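To make the capture-then-integrate idea a little more concrete, here is a minimal Python/NumPy sketch. It is not NUTIK’s code, and the representation is an assumption made for illustration: every pixel shares one set of (possibly non-uniform) interval boundaries and holds a piecewise-constant estimate of incident light on each interval. The function names `interval_weights` and `render`, and the region-map-plus-list-of-gain-functions interface, are hypothetical. Under those assumptions an output pixel is just the integral of gain times light, so picking a different interval, a negative gain, or an ISO ramp is only a different function g(t), and nothing about the captured record changes.

```python
import numpy as np


def interval_weights(times, gain, substeps=64):
    """Integrate a gain function g(t) over each interval [t_i, t_{i+1}).

    Midpoint rule with `substeps` points per interval: exact for gains that
    are linear within each substep (e.g. an ISO ramp) and for step gains
    whose edges fall on substep boundaries.
    """
    times = np.asarray(times, dtype=float)
    weights = np.empty(len(times) - 1)
    for i, (t0, t1) in enumerate(zip(times[:-1], times[1:])):
        edges = np.linspace(t0, t1, substeps + 1)
        mids = 0.5 * (edges[:-1] + edges[1:])
        weights[i] = np.mean(gain(mids)) * (t1 - t0)
    return weights


def render(times, radiance, region_map, gains, substeps=64):
    """Hypothetical post-capture integration of a simplified IMEV.

    times:      shared interval boundaries, length n + 1 (may be non-uniform)
    radiance:   array (n, H, W); radiance[i] is the light estimate on interval i
    region_map: int array (H, W) selecting which gain function each pixel uses
    gains:      list of vectorized callables g(t); values may be negative
                or vary over time
    """
    radiance = np.asarray(radiance, dtype=float)
    region_map = np.asarray(region_map, dtype=int)
    # One row of per-interval weights per gain function: shape (K, n).
    weights = np.stack([interval_weights(times, g, substeps) for g in gains])
    # Look up each pixel's weight vector: shape (H, W, n).
    per_pixel = weights[region_map]
    # Weighted sum over intervals for every pixel.
    return np.einsum("hwn,nhw->hw", per_pixel, radiance)


# Usage: one row of three pixels, each assigned a different gain function.
times = [0.0, 0.5, 1.0, 2.0]  # non-uniform interval boundaries
radiance = np.array([2.0, 4.0, 1.0])[:, None, None] * np.ones((3, 1, 3))
region_map = [[0, 1, 2]]
gains = [
    # Ordinary exposure over the whole record.
    lambda t: np.ones_like(t),
    # Same exposure plus a negative-gain term that cancels [0.5, 1.0).
    lambda t: np.ones_like(t) + np.where((t >= 0.5) & (t < 1.0), -1.0, 0.0),
    # Gain ramping linearly during the exposure.
    lambda t: t,
]
image = render(times, radiance, region_map, gains)
print(image)  # values close to 4.0, 2.0 and 3.25
```

Because only the weights depend on the user’s choices, re-rendering with a different gain list or region map costs nothing but compute, which is the sense in which those choices can be made as many times as you want. A real IMEV would plausibly carry per-sensel sample times and noise information, which this sketch ignores.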

I also spent a considerable amount of time hacking my way into some mirrorless cameras’ internals to see if I could build a better capture device for creating IMEVs through software changes alone. It turns out that it’s not directly possible, because some of those film-simulacra assumptions are baked into the camera stack far more deeply than an informed guess would suggest. That work felt like perseveration for a long time because I couldn’t build the thing I had in my head, but the details are interesting enough to be independently publishable; here’s a section about Magic Lantern and the internals of a Canon EOS M that I spun out as a paper at EI’24.

Being me, I threaded a bunch of history-of-technology work throughout, because as far as I’m concerned the context is always the most important part. That includes comparisons to some other ways of deferring photographic parameter decisions (like plenoptic cameras), and a bunch of theory-spinning about concepts for imaging systems that work in a capture-then-integrate mode. There’s also quite a bit of work on desirable properties (e.g., it turns out uniform sampling is generally undesirable for this sort of thing, so there’s a thread of work thinking through non-uniform sampling), and the start of mathematical abstractions for the model. Once you start thinking about photography as the process of using photons as a sampling mechanism to determine scene content, it all turns into horrifying things that smell like calculus over random variables. I set up the argument for that, but formalizing it will be someone else’s problem; my advisor and I are both much more taken with empirical models than with mathematical elegance that doesn’t precisely correspond to reality.

And now some less academic talk

I’ll preface this by saying the work I earned my PhD for is, at the very least, not untrue, and it’s easy to argue that it offers a substantial theoretical method-and-model improvement for accomplishing a variety of photographic capture and post-processing tasks.

How much I believe it’s really going to matter is …debatable… but anyone claiming their research is the ultimate correct answer that will take over the world is to be regarded as so full of shit it’s coming out of their face, so take my qualified approval as a sign of good work.

And that is where I ran into some problems; about a decade ago I was reading a lot of academic work, and doing a lot of editing for other people’s academic papers on projects where I was already involved for experimental-apparatus reasons but had no stake in the results. I would get pulled back in to edit a disaster of a paper on a decent piece of work, and I got very good at spewing appealing academic writing.

We won awards for papers I expected to get in trouble for editing into almost a mockery of academic writing.

I came to fully realize how pervasive the manipulative, stake-claiming behaviors are (they pretend to add context, but have fully devolved into meaningless games about citation counts), along with the general combination of pretension and objectively mediocre writing the modern academic style demands.

…And that thoroughly turned me off to the style.

And this kind of broke me.

So much of my identity is based on the premise that academia is a legitimate enterprise, and it was not nice to realize how much of it is an empty prestige game that sometimes, incidentally, produces research.

I still wanted to work sort-of in academia; I think one of society’s pressing issues is that we’re not producing enough, good enough experts to stay ahead of technical debt and maintain our technological society, and I’m extremely well-placed to spend much of my life tackling that problem: producing more, more practical, more thoughtful, and more skilled electrical and computer engineers.

But to make a living doing so, I need higher degrees from a research system I no longer hold in much esteem, so I had to see this thing through.

My advisor – who I really do like personally and have to give a lot of credit for putting up with me and my attitude – was sort of a terrible advisor.

Getting him to focus on work that would actually get me a credential was like pilling a cat.

For example, at the beginning of the Fall’23 semester, I made it known that I had scheduled out about 5 chunks of time for deep work on my PhD around my way-more-than-half-time “Half time” instructor job, and that I didn’t want to get involved with any conferences that would consume them. Then, within weeks, he pulled me into helping host LCPC. Then I got pulled into helping with an unrelated project in the research group. Then he pulled me into designing a new demo for Supercomputing. And then I got pulled into going to Supercomputing. And then I got COVID from the trip. And … oops, that was all my large chunks of time for deep work – why is this degree taking so long?

And, as it turns out, several times when I thought he was paying attention, he wasn’t, so I caught flak for things in my document that I’d tried to run by him and gotten an “OK, sure” about.

I knew this was a risk because I did my MSEE under him, and I’d carefully picked a co-chair who would take care of that kind of thing… who then retired, leaving me alarmingly unsupervised while I was wrapping up.

Apparently the unusually and uncomfortably long closed discussion after my defense consisted largely of the other committee members (and I totally accept my share of the blame for not keeping them sufficiently in the loop) dragging my advisor to the tune of “Have you been supervising him at all?!” – Reader, he had in fact, generally speaking, not.

To spread blame around, I’d also like to present several of the most odious questions I received from committee members during my defense, and the answers I would have given if these people didn’t have institutional power over me at the time. Names removed to protect the guilty.

CM: “I’m surprised you didn’t talk about AI/ML, because that’s what we’re all working on now.”

Actual Answer: That’s intentional. I certainly fucking hope we’re not “all” working on AI; this AI hype cycle is mostly bullshit, and we’re years overdue for another AI winter.

Just like the last two AI booms, and the map-reduce hype cycle a decade ago, and a dozen other examples, we’re eventually going to characterize the relatively narrow set of things that these very large k-token unsupervised stochastic models are good for, we’re going to figure out why they’re good at those things, and AI winter will set in again.

Everyone still hyping it is either a scammer or a mark – which are you?

Also, calling every vaguely heuristic or non-deterministic algorithm “AI” is ridiculous.

Provided Answer: Well, the application of AI to this would be building ON TOP of my synthesis tools, to automatically generate masks and functions and such.

CM: “I see your list of publications, how many of them are at IEEE or ACM venues?”

Actual Answer: …Yeah, I’m not going to feel any shame about the venues I published in, not only because I picked suitable venues based on the topic, but because I think the entire academic publishing industry is a vapid prestige game rife with fraud. We could stop submitting, stop reviewing, and put the major publishers into archive-only tomorrow and nothing of value would be lost. We might even start doing research instead of just constantly hustling for the epeen contest.

Provided Answer: Most of the directly relevant papers were at the (formerly joint with SPIE) IS&T Electronic Imaging conference, because I think it’s the most suitable venue for this kind of computational photography (rather than image processing) work.

CM: “What’s the algorithmic contribution? Why didn’t you show us code instead of talking about hacking cameras and history?”

Actual Answer: Because the context matters and the implementation details don’t.

Because this is a systems project, and the code in this work exists only as an existence proof that the techniques can be implemented.

Asking about the four pages of nested conditional loops to get the right data in the right place at the right time that are the heart of my code is like asking what kind of hammers I used to drive the nails in a carpentry project.

The best code is code whose implementation details you don’t have to think about, because it does what it says on the box. Having to think about the details of the code generally means either it’s not doing what it’s supposed to, or you had to do something heinous to make it work, which is about to become a maintenance burden if anyone ever looks at it again.

Provided Answer: The code in this work exists only as an existence proof that the techniques can be implemented – there is a sophisticated algorithm to do the image integration, but looking at four pages of nested conditional loops isn’t interesting; what they do is. (…yeah, I kind of got honest on that one.)

CM: “I see you aren’t the first author on a lot of the related papers. Which of them are in your thesis?”

Actual Answer: We’ve already established that I like and respect building and documenting tools, I neither like nor respect anything about academic publishing, so no, I was not the first author on most of these papers that I built the apparatus for.

This isn’t a compilation thesis, I didn’t lift any large pieces of text I didn’t write myself into the document – there are a few phrases and figures in common, and one recent paper I was first author on is essentially built out of a section that I was convinced had standalone value, but again, no, I didn’t include anything I didn’t produce. Are you fucking serious?

Provided Answer: This isn’t a compilation thesis, I didn’t lift any text I didn’t write myself into the document – there are a few passages and figures in common, and one recent paper was essentially built out of a chapter that I was convinced had standalone value, but no, I didn’t include anything I didn’t produce.

Extended timeline and various irritations aside, I’m done now, I’m pretty happy with the result, and UK’s ECE department is starting a search for a Lecturer position to do exactly what I’ve been doing for the department, for which I am the model of the preferred candidate, so this is all turning out as desired.